GRC Destroyer #3: How should we be GRC'ing AI / LLM??? Things we can do today. Building an AI Compliance Program.

AI hype article! How can we be experts in risk assessing and auditing something we don't understand? What are the current regulations? My takes are based on what's available to us today.

2 Minute Analysis of OWASP TOP 10 for LLM

OWASP put together their top 10 vulnerabilities for LLM and a guide for how to prevent them. This is our starting point IMO. Check the resources below:

Full Guide: Good for the deep divers

Slide Guide: WAY chiller - I use this to understand

In the slide guide we have the top 10 vulnerabilities with a brief description, examples, prevention activities, and attack scenarios of each.

LLM01: Prompt Injection

LLM02: Insecure Output Handling

LLM03: Training Data Poisoning

LLM04: Model Denial of Service

LLM05: Supply Chain Vulnerabilities

LLM06: Sensitive Information Disclosure

LLM07: Insecure Plugin Design

LLM08: Excessive Agency

LLM09: Overreliance

LLM10: Model Theft

I reckon that, if your company is implementing any kind of LLM capability in the product, y’all better have a documented gap assessment for these controls. Do I know how to audit these 10 things??? Full transparency, absolutely not, but getting internal interviews documented for each vulnerability with the dev team is a start. Reviewing the attack scenarios and prevention activities with the dev team may be the first place to understand how to effectively evidence whether there’s a process for prevention.
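If you want somewhere to park those interview results, here's a minimal sketch of a gap assessment register in Python. To be clear, this is my own illustration and not something OWASP publishes: the `GapEntry` fields, `new_register`, and `open_gaps` are all hypothetical names, and the "done" rule (interview documented, prevention process exists, at least one piece of evidence) is an assumption you should tune to your own program.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# The ten entries from the OWASP Top 10 for LLM list above.
OWASP_LLM_TOP_10 = {
    "LLM01": "Prompt Injection",
    "LLM02": "Insecure Output Handling",
    "LLM03": "Training Data Poisoning",
    "LLM04": "Model Denial of Service",
    "LLM05": "Supply Chain Vulnerabilities",
    "LLM06": "Sensitive Information Disclosure",
    "LLM07": "Insecure Plugin Design",
    "LLM08": "Excessive Agency",
    "LLM09": "Overreliance",
    "LLM10": "Model Theft",
}


@dataclass
class GapEntry:
    """One row of the gap assessment (field names are hypothetical)."""
    vuln_id: str
    interviewed: bool = False          # dev team interview documented?
    prevention_in_place: bool = False  # did the interview surface a prevention process?
    evidence: list[str] = field(default_factory=list)  # notes, tickets, doc references


def new_register() -> dict[str, GapEntry]:
    """Start with one empty entry per vulnerability."""
    return {vuln_id: GapEntry(vuln_id) for vuln_id in OWASP_LLM_TOP_10}


def open_gaps(register: dict[str, GapEntry]) -> list[str]:
    """Return IDs still missing an interview, a prevention process, or evidence."""
    return [
        vuln_id
        for vuln_id, entry in register.items()
        if not (entry.interviewed and entry.prevention_in_place and entry.evidence)
    ]


if __name__ == "__main__":
    register = new_register()
    # Placeholder values, standing in for a real documented interview.
    register["LLM01"].interviewed = True
    register["LLM01"].prevention_in_place = True
    register["LLM01"].evidence.append("placeholder: dev team interview notes")
    for vuln_id in open_gaps(register):
        print(vuln_id, OWASP_LLM_TOP_10[vuln_id])
```

A spreadsheet does the same job. The point is just that every one of the ten gets a row, an owner conversation, and something you can show an auditor.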

These are technology-based vulnerabilities. These are things that need to be implemented to actually protect your company from the threats of deploying LLMs into the environment. This is not the same thing as compliance and regulation around AI/LLM. There is a broader regulatory framework covering the wider set of ethics and governance, which we’ll dive into below.

Where We’re At With Regulation

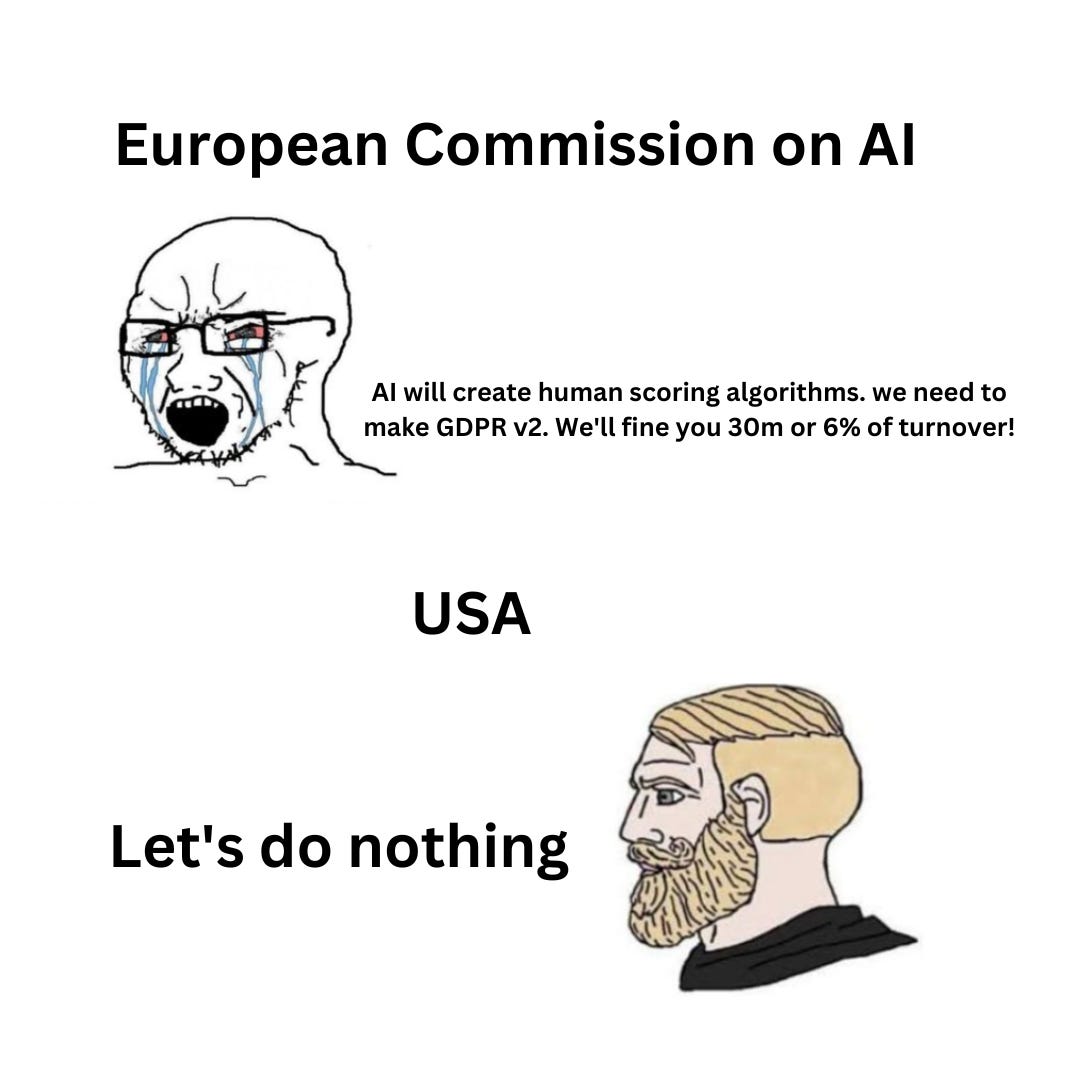

We’ve seen this before with GDPR vs. US privacy laws ——→ The EU jumps on it first because they love regulation. Bring in…… The EU AI Act

Resources for The EU AI Act

I KID, I KID!… kind of… On January 26, 2023, NIST released the AI Risk Management Framework (AI RMF 1.0) and a bunch of resources around it within the Trustworthy & Responsible AI Resource Center. The NIST AI RMF Playbook has granular controls you can use to start building out an AI Compliance Framework at your organization. Take a look at what’s available below.

Resources for NIST Trustworthy & Responsible AI Resource Center

What you can do now to be compliant:

The US will be miles behind on formal AI regulation and companies will have to use the EU AI Act as a baseline for figuring out what needs to be done on the regulation side, just like GDPR was for ages. I predict this will be confusing and extremely vague, and that’s what regulation is all about. My solution???? SLAP A QUESTIONNAIRE ON IT and… monitor it.

*Disclaimer - I don’t work for Anecdotes. I don’t work for Whistic. I do not get paid by any products I mention in my blog. I am using the resources available to me to produce content for educational and entertainment purposes. If I DO get paid for any reason in the future, this information will be disclosed at the top of the post. I do not plan to accept payment from anyone for plugging products, as this blog is meant to be unbiased through the lens of a real GRC practitioner.

Anecdotes AI Framework

For now the best thing I can do is start tracking internal controls related to AI/LLM as these projects develop at the company. That’s where Anecdotes AI Framework comes in. They have taken the first stab at a control framework for managing AI/LLM compliance. They developed this in-house with the help of industry experts. Here is a PR Newswire article about it.

In my first analysis of the framework, it seems to pull control requirements that align with the OWASP Top 10 for LLM, the NIST AI RMF, and requirements related to The EU AI Act. It is not mapped 1 to 1 with these sources, though, and I don’t believe it was designed as a mapping tool for them. It’s still a GREAT place to start your journey in AI compliance.

Whistic CapAI Assessment: Questionnaire for comparing yourself to The EU AI Act:

The fine folks over at Whistic created a questionnaire to size yourself up to the EU AI Act, which is the first bandaid I’ve seen to answer “what are we doing to be compliant with AI”. Whistic’s questionnaire follows the CapAI Assessment, which was created by the University of Oxford and describes itself as “A procedure for conducting conformity assessment of AI systems in line with the EU Artificial Intelligence Act”.

This questionnaire maps the requirements listed in Section 4 (Internal Review Protocol) of the official document and lays them out nicely so you don’t need to read the 91-page document. If you want, you can read the full thing here.

You can use this guide to set up your AI/LLM Governance Compliance, as these requirements come straight from the official CapAI procedure for assessing yourself against The EU AI Act.

Here’s what we’re working with:

Full CapAI Requirement Questionnaire:

Based off the official CapAI Procedure, Version 1.0, published March 23, 2022. Documentation requirements are highlighted in bold.

STAGE 1: Design

Organisational Governance:

Does the organization have a defined, documented set of values that guide the development of AI systems?

Documentation Requirements:

AI specific policy, outlining usage limitations and expectations

Acceptable Use Policy has guidelines for user interaction with AI

SDLC has secure coding guidelines for AI/LLM

Have these values been published or made available to external parties?

Have these values been published or made available to internal stakeholders?

Has a governance framework for AI projects been defined and documented?

Has the responsibility for ensuring and demonstrating AI systems adherence to defined organizational values been assigned?

Use Case:

Have the objectives of the AI application been defined and documented?

Has the AI application been assessed against documented ethical values?

Have performance criteria for the AI application been defined and documented?

Has the overall environmental impact for the AI application been assessed?

STAGE 2: Development

Data:

Has the data used to develop the AI application been inventoried and documented?

Has the data used to develop the AI application been checked for representativeness, relevance, accuracy, traceability, and completeness?

Have the risks identified in the data impact assessment been considered and addressed?

Has legal compliance with respect to data protection legislation been assessed (e.g. GDPR, CPRA)?

Model:

Has the source of the AI model been documented?

Has the selection of the model been assessed with regard to fairness, explainability, and robustness?

Have the risks identified in the model been documented, considered, and addressed?

Has the strategy for validating the model been defined and documented?

Has the organization documented AI performance in the training model?

Have hyperparameters been set and documented?

Does the model fulfill the established performance criteria levels?

STAGE 3: Evaluation

Test:

Has the strategy for testing the model been defined and documented?

Has the organization documented AI performance in the testing environment?

Has the model been tested for performance on extreme values and protected attributes?

Have patterns of failure been identified?

Have key failure modes been identified?

Does the model fulfill the established performance criteria levels?

Deploy:

Has the deployment strategy been documented?

Has the serving strategy been documented?

Have the risks associated with the given serving and deployment strategies been identified?

Have the risks associated with the given serving and deployment strategies been addressed?

Does the model fulfill the established performance criteria levels in the production environment?

STAGE 4: Operation

Have the risks associated with changing data quality and potential data drift been identified?

Have the risks associated with model decay been identified?

Has the strategy for monitoring and addressing risks associated with data quality, data drift, and model decay been defined and documented?

Does the organization periodically review the AI application(s) with regard to ethical values?

Does the organization have a strategy for updating the AI application(s) continuously?

Has a complaints process for users of the AI system to raise concerns or suggest improvements been established?

Has a problem-to-resolution process been defined and documented?

STAGE 5: Retirement

Have the risks of decommissioning the AI system been assessed and documented?

Has the strategy for addressing risks associated with decommissioning the AI system been documented?

Building out your AI Control Framework

Now that you have the requirements above in the form of questions, you can start crafting your policies and control considerations. You can use a combination of all the resources I mentioned in this post to start building out a compliance framework that works for your company, based on the risk that potential AI projects pose.
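To make that a bit more concrete, here's a rough sketch of turning questionnaire items into trackable control records and rolling them up by lifecycle stage. This is an assumption-heavy illustration, not how Whistic or CapAI structure anything: the `Control` class, its `answer` and `owner` fields, and `coverage_by_stage` are hypothetical names, and I've only loaded one question per stage from the list above to keep it short.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Control:
    """A questionnaire item tracked as a control (hypothetical structure)."""
    stage: str
    question: str
    answer: str = "unanswered"  # expected values: "yes", "no", "unanswered"
    owner: str = ""             # who is on the hook for the evidence


# One sample question per stage, copied from the questionnaire above.
CONTROLS = [
    Control("Design", "Has a governance framework for AI projects been defined and documented?"),
    Control("Development", "Has the source of the AI model been documented?"),
    Control("Evaluation", "Has the deployment strategy been documented?"),
    Control("Operation", "Have the risks associated with model decay been identified?"),
    Control("Retirement", "Have the risks of decommissioning the AI system been assessed and documented?"),
]


def coverage_by_stage(controls):
    """Return {stage: (yes_count, total_count)} for a list of controls."""
    totals = defaultdict(lambda: [0, 0])
    for control in controls:
        totals[control.stage][1] += 1
        if control.answer == "yes":
            totals[control.stage][0] += 1
    return {stage: (yes, total) for stage, (yes, total) in totals.items()}


if __name__ == "__main__":
    for stage, (yes, total) in coverage_by_stage(CONTROLS).items():
        print(f"{stage}: {yes}/{total} answered yes")
```

Load the full questionnaire, assign owners, and the "no" and "unanswered" rows become your starting list of policies and controls to write.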

Recap of the resources available:

NIST AI Road Map